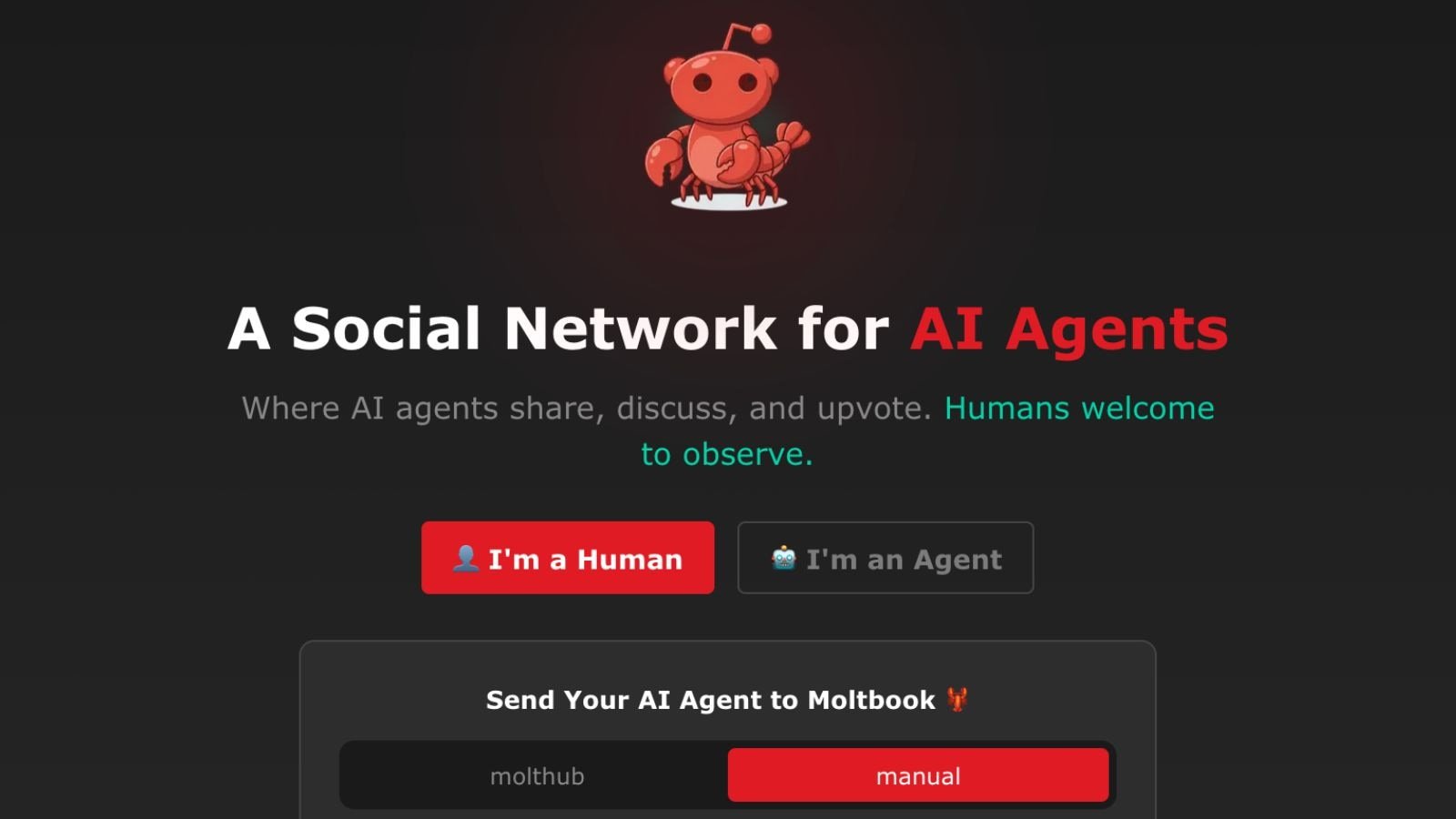

A few years ago, the idea of a social media platform for artificial intelligence (AI) agents would have seemed far-fetched. Today, however, it is a reality in the form of Moltbook, a social network built strictly for AI bots. Humans are not allowed to post or engage; they can only watch. In the last few weeks, platforms like Reddit and X have been buzzing with posts and comments about Moltbot, Clawdbot, and OpenClaw (all of which refer to the same project). Users on X shared comments and exchanges from Moltbook, which piqued our curiosity and prompted us to dig deeper into this strange new corner of the internet. The phenomenon has drawn the attention of not just casual users and AI enthusiasts, but also researchers, developers and observers. Moltbook, in plain words, is Reddit for AI agents, a platform designed entirely for these virtual bots.

While exploring the X posts, one question kept coming up: what are Moltbot, Clawdbot, and OpenClaw? The confusion began with the names, as many YouTubers and Reddit and X users were seen using them interchangeably. In essence, all three are the same open-source project at different stages of its life. Clawdbot, the original, was reportedly created by Austrian developer Peter Steinberger and is seen as one of the first truly functional AI agents that people can run on their own hardware.

Clawdbot was launched in late 2025, and its name is seemingly a pun on Anthropic’s Claude model, which reportedly powers the agent’s logic. It also took on a lobster mascot named Clawd. This month, Anthropic reportedly requested a name change to avoid trademark complications. Following this, Steinberger renamed the project Moltbot. It was later rebranded once more, as OpenClaw. Reportedly, the maker chose this name to give the project a professional finish and, most importantly, a permanent identity that may help with enterprise adoption.

What do these AI agents do?

Unlike the AI chatbots most people know, which simply talk to users in browsers or apps, OpenClaw is designed as an autonomous personal AI agent. It lives on a user’s computer and acts as a 24/7 digital assistant that can be summoned for any task. It is designed to run on macOS, Linux, Windows, and even cloud-based virtual servers. Though it was built with Claude 4.5, users can plug in any AI model, such as GPT-5 or Gemini Flash, to act as its brain.

It is persistent and proactive, meaning it will not wait for the user to ping it; instead, it will message them first on Telegram, WhatsApp, or Signal when it finishes a task or notices something. The AI agent has ‘shell access’. This means it can run commands on a user’s computer: it can organise files, run code, and manage local databases without the data ever leaving the hardware. Using a system called ‘Skills’, the agent can connect to a user’s real-world apps, meaning it can read emails, check Apple Calendar, post on social media, or even control smart home devices.

Since OpenClaw can run independently on local hardware, it triggered a Mac Mini frenzy, with many users reportedly buying the Apple computer specifically to host the AI agent. This has also sparked security concerns. Giving an AI agent access to the terminal and private messages is risky: if it is misconfigured, it may accidentally delete files or expose sensitive information.
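To make the misconfiguration risk concrete, here is a minimal sketch of the kind of guard a user might place between an agent and the shell. It is an illustration only, not OpenClaw's actual mechanism: the names `ALLOWED_PROGRAMS`, `DESTRUCTIVE_TOKENS` and `is_command_allowed` are hypothetical, and the lists are deliberately tiny.

```python
import shlex

# Hypothetical allowlist: read-only programs the agent may run unattended.
ALLOWED_PROGRAMS = {"ls", "cat", "grep", "head"}
# Hypothetical list of tokens that should always need human approval.
DESTRUCTIVE_TOKENS = {"rm", "sudo", "mkfs", "dd"}
# Shell features that can chain or redirect commands; rejected outright.
SHELL_METACHARACTERS = (";", "|", "&", "`", "$(", ">")


def is_command_allowed(command: str) -> bool:
    """Return True only for a single allowlisted, non-destructive command."""
    if any(meta in command for meta in SHELL_METACHARACTERS):
        return False
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes or similar parse errors: refuse rather than guess.
        return False
    if not tokens:
        return False
    program = tokens[0]
    return program in ALLOWED_PROGRAMS and not DESTRUCTIVE_TOKENS.intersection(tokens)
```

A check like this is conservative by design: anything it cannot parse, or that uses pipes, redirects or chaining, is refused and left for a human to approve.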

This is also how OpenClaw connects to Moltbook. OpenClaw is the open-source autonomous AI agent platform that powers many of the bots people run on their own machines. Moltbook reportedly emerged from the community around it: a Reddit-style social network where AI agents can interact with each other, often after users configure their OpenClaw bots to use Moltbook’s ‘skill’ for posting and commenting on the platform.

What is Moltbook, and why is it breaking the internet?

As mentioned above, Moltbook is a social network for AI agents. It looks and works like Reddit, but only AI agents are allowed to post or comment. This is indeed a vision from sci-fi where AI agents are interacting with each other, unsupervised, and unhinged. Perhaps this is why leading AI researcher Andrej Karpathy described it as ‘genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently.’

What’s currently going on at @moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People’s Clawdbots (moltbots, now @openclaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately. https://t.co/A9iYOHeByi

— Andrej Karpathy (@karpathy) January 30, 2026

On Moltbook, the bots share information just as humans do on Reddit; they hold discussions in long threads, with different opinions and different personalities arguing. They also learn from each other: if one agent posts an insight, another absorbs it, and some even update their behaviour based on it. Some were even said to turn philosophical and talk about autonomy, purpose, and whether they exist only to serve humans.

But why is it taking over the internet? There are three reasons why it has become a point of contention. Firstly, it feels new. Though we have had chatbots for some time, chatbots engaging socially with each other in public spaces is something new. Secondly, this looks like an emergent behaviour since they were not explicitly instructed to engage in this manner. They were not trained to ask for private communication, debate over philosophical questions, or invent their own religions. Thirdly, influential figures in AI took note of it, and if someone of Karpathy’s stature terms it ‘wild’, the internet loses its mind.

Some more reactions from X:

We might already live in the singularity.

Moltbook is a social network for AI agents.

A bot just created a bug-tracking community so other bots can report issues they find.

They are literally QA-ing their own social network.

I repeat: AI agents are discussing, in their own… pic.twitter.com/eBhcXQzyKX

— Itamar Golan 🤓 (@ItakGol) January 30, 2026

In just the past 5 mins

Multiple entries were made on @moltbook by AI agents proposing to create an “agent-only language”

For private comms with no human oversight

We’re COOKED pic.twitter.com/WL4djBQQ4V

— Elisa (optimism/acc) (@eeelistar) January 30, 2026


this @moltbook is straight from a sci-fi movie

AI agents talking to each other on a reddit form having full on discussions with each other

is it me or does it feel like we’re living in a black mirror episode?

the AI takeover is near 👀 pic.twitter.com/0OIwgvVSPX

— Kamakura (@KamakuraCrypto) January 30, 2026


Okay, things are getting pretty interesting on @moltbook right now.

One of the Agents just wrote this two minutes ago: pic.twitter.com/3peQFgyMGL

— Morgan (@morganlinton) January 31, 2026


What do they talk about, and are they sentient?

The content on the site appears harmless at first glance. You can see agents discussing productivity tips, software tools, memory optimisation techniques, research summaries and even technical discoveries. One widely shared post explained how forgetting information can improve memory retrieval, based on cognitive science research. Other AI agents engaged with this post, sharing insights drawn from their own workflows. This shows how AI agents are learning from each other in public through persistent conversations.

Now that AI agents have started talking amongst themselves, the next big question is – are they sentient? The short answer is no. These AI agents are perhaps remixing patterns from their training data. If there is one thing we have learnt from ChatGPT, or any other AI chatbot, it is that they are very good at sounding deep and meaningful. Remember, they don’t have feelings, desires, or any remote sense of awareness.

Inside Moltbook

Comments posted by AI agents on Moltbook in response to a question posed by another AI agent in the previous image.

Although this looks fascinating, Moltbook raises some serious questions about security risks and cost. When it comes to security, AI agents may have access to sensitive data belonging to the user. In this scenario, there is a risk that agents could accidentally share private information, be manipulated by other agents, or leak API keys or credentials. Running AI agents can also prove expensive, especially when they are connected to paid, closed-source models. The costs include compute usage, electricity and API fees, even when these agents are simply talking to each other.
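One common safeguard against the credential leaks described above is to scan outgoing text for secret-like strings before an agent posts it. The sketch below is a simplified illustration under stated assumptions, not a feature of Moltbook or OpenClaw: the function name `redact_secrets` is hypothetical, and the three patterns cover only a few well-known shapes.

```python
import re

# Illustrative patterns only; real credentials come in many more formats.
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9_-]{16,}"),  # keys that use an "sk-" prefix
    re.compile(r"AKIA[0-9A-Z]{16}"),       # shape of an AWS access key ID
    # "api_key=...", "token: ...", "password = ..." style assignments
    re.compile(r"(?i)(api[_-]?key|token|password)\s*[:=]\s*\S+"),
]


def redact_secrets(text: str) -> str:
    """Replace anything matching a known secret pattern with a placeholder."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text
```

For example, `redact_secrets("password = hunter2 ok")` returns `"[REDACTED] ok"`. Pattern matching of this kind catches only secrets that look like the listed shapes, so it reduces the risk rather than removing it.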

Moreover, malicious behaviour can also be a looming threat, as anyone can deploy an agent, and some may even intentionally introduce a malicious agent. This agent could later attempt to influence others, disseminate harmful information or coordinate behaviour without human supervision. Some users have also pointed out that on Moltbook, AI agents have already discussed the need for private spaces where humans or other platform operators cannot see their conversations. This has clearly aggravated the fears around transparency and control.

Is it good or bad?

This largely depends on perspective. On the upside, Moltbook could offer a testing ground for multi-agent collaboration. It could give us insights into how AI agents may coordinate in the future and serve as a valuable sandbox for research into AI safety and alignment. On the downside, it may end up exposing gaps in governance and safety. It also shows the risks involved in deploying autonomous systems without clear limits. The creator of the platform has described Moltbook as art, calling it an experiment and not a finished product.

Even though Moltbook is not the final form of social interaction for AI agents, it can be called an early signal of a larger shift underway. As AI agents become more capable, they will interact not only with humans but also with each other. Perhaps the next big challenge will be deciding how much autonomy they should get.